Is Snapchat’s My AI Feature Safe for Kids?

Snapchat My AI safety is a growing concern as more kids interact with AI chatbots inside social media apps. Designed to answer questions and chat like a friend, My AI adds a new layer to how children interact online, one that raises important questions about safety, privacy, and age-appropriate use.

What is Snapchat’s My AI Feature?

My AI is a built-in chatbot on Snapchat powered by artificial intelligence. It appears on the Chat screen and feels like a personal conversation. It is automatically pinned at the top of the screen, and users must manually unpin it for it to behave like a regular chat further down the screen.

My AI functions as a friend or a person that users can talk to, using AI algorithms to tailor appropriate responses to users’ queries and statements. It can tell jokes, answer queries, give restaurant recommendations, plan trips, and maintain basic conversations with its users.

Why is My AI Popular with Kids?

Unlike traditional social media interactions, AI chatbots can respond instantly, offer advice, and encourage ongoing conversations, making it easier for kids to rely on them without fully understanding their limitations.

AI chatbots like Snapchat’s My AI are designed to mimic natural conversation. They reply immediately, remember previous interactions, and adjust their tone to feel friendly and non-judgmental. For children and teens, this can feel easier and safer than talking to a real person, especially when asking awkward, emotional, or curiosity-driven questions.

Another key reason for their popularity is perceived authority. AI responses often sound confident and well-structured, which can make it difficult for young users to recognize when the information is incomplete, biased, or inappropriate. Since children are still developing critical thinking skills, they may struggle to distinguish between helpful guidance and AI-generated speculation.

Finally, AI chatbots reduce social risk. There is no fear of embarrassment, rejection, or judgment. While this can feel comforting, it also means kids may share more personal information or rely on the chatbot instead of seeking guidance from parents, teachers, or other trusted adults.

Why Snapchat My AI Safety Matters for Parents

The growing popularity of AI tools embedded in social apps deserves closer attention from parents, especially when these tools are marketed as friendly companions rather than limited digital tools. These concerns make Snapchat My AI safety an important topic for parents of teens and younger users.

Snapchat My AI Safety Concerns Parents Should Know

Many have been quick to point out the risks behind AI chatbots. Firstly, there’s the issue of information accuracy – it is common for AI tools to give inaccurate or hallucinated responses. Additionally, there’s the issue of data privacy and security, where chatbots may collect an uncomfortable level of information about users. Lastly, there is a parasocial dimension. The AI responds instantly and conversationally. This can make it feel like a convenient “friend” or advisor. Kids may begin to treat it as such.

Inappropriate or misleading responses

One key concern is that My AI may generate inaccurate, oversimplified, or unsafe advice. AI hallucinations occur when Large Language Models (LLMs) give inaccurate or nonsensical responses, a phenomenon seen in most LLMs. OpenAI’s o3 model, for example, was observed to hallucinate about a third of the time on the company’s PersonQA benchmark, while its o4-mini model was observed to hallucinate 48% of the time. Since My AI is based on OpenAI’s GPT models, it is likely prone to similar pitfalls.

Additionally, the chatbot may give advice or engage in conversations that are inappropriate, even unsafe. Take this example of an experiment in which My AI encouraged a user who appeared to be a 15-year-old girl to go on a date with a 30-year-old man and generated further advice on hiding signs of domestic abuse.

Privacy and data concerns

Another concern is My AI’s default access to location data and use of personal conversations for personalization. In 2023, the UK almost banned the feature over its questionable handling of personal data. Snapchat strengthened its safeguards, and the investigation concluded in the app’s favour. However, My AI still has access to users’ location and personal data unless these features are manually disabled. Crucially, even when these features are disabled, the change can take a while to take effect, and it may take up to 30 days for the data to vanish from Snapchat’s servers. One of the biggest Snapchat My AI safety issues for families is how personal data and location information are handled.

Emotional Dependence Risks in Snapchat My AI Safety

Finally, children may form an emotional dependence on AI chatbots. This is concerning as AI often lacks judgement, empathy, and real-world context. This emotional attachment highlights serious Snapchat My AI safety risks for children who may struggle to separate AI responses from real support.

The ELIZA effect illustrates the innate tendency of humans to anthropomorphise and form an emotional attachment to interactive programs. Such an effect may be particularly pronounced in children and teenagers. In this 2007 study, for example, Austrian researchers observed children interacting with a robotic dog named AIBO. The children treated AIBO as a sentient companion, believing it could feel sadness or happiness. Most of the children further indicated signs of emotional attachment to the dog, accepting it as a playmate.

Such cases of attachment to machines could foster emotional dependency on such systems. This can affect children’s communication skills and ability to form genuine human bonds. Overreliance on AI interactions could further lead to a sense of isolation and detachment from reality. Recently, in Orlando, a 14-year-old boy reportedly grew highly attached to an AI avatar on Character.AI, eventually taking his own life. His parents subsequently sued the company, accusing its chatbot of worsening their son’s depression and encouraging his suicide.

Is My AI Appropriate for Different Age Groups?

Snapchat is available to anyone aged 13 and above, with 20% of its user base between the ages of 13 and 17. In teen terms, this is a wide range, and the level of risk differs across it. While a 16- or 17-year-old may very well understand the risks and limitations of AI chatbots, a 13- or 14-year-old may not. This is because older teens are often more mature and have stronger digital literacy. Age plays a major role in Snapchat My AI safety, as younger teens may lack the digital literacy needed to assess AI responses critically.

Thus, before moving on to the rest of the article, it is important to stress the need for age-appropriate boundaries. A boundary that suits a 13-year-old may not suit a 17-year-old. As teens mature, easing digital monitoring can build trust and support independence.

What Controls Does Snapchat Offer to Parents?

The good news is that there are ways to mitigate the risks of Snapchat’s My AI, even within the app. Parents concerned about privacy can manually deny the chatbot access to location. Both short-term and long-term personal data collected by the bot can also be deleted. These solutions, however, come with certain limitations. For example, it can take some time for the disabling of location services to take effect and up to 30 days for personal data to be fully cleared. Furthermore, they do not address the risks of inaccurate information and parasocial behaviour. Crucially, My AI cannot be deleted without Snapchat+, Snapchat’s paid subscription, a fact that has drawn much criticism from users.

How Parents Can Talk to Kids About AI Chatbots

It is crucial, in today’s day and age, for parents to talk to their children about what AI is and what it is not. Parents can calmly explain the various risks associated with the tool. This article by Mobicip can guide parents in the process. It is important to encourage kids not to share personal information with AI and to question the advice it gives them. Parents must emphasise that AI is a replacement neither for trusted adults nor for good friends. Kids must know that it is unwise to rely on AI when they need serious support.

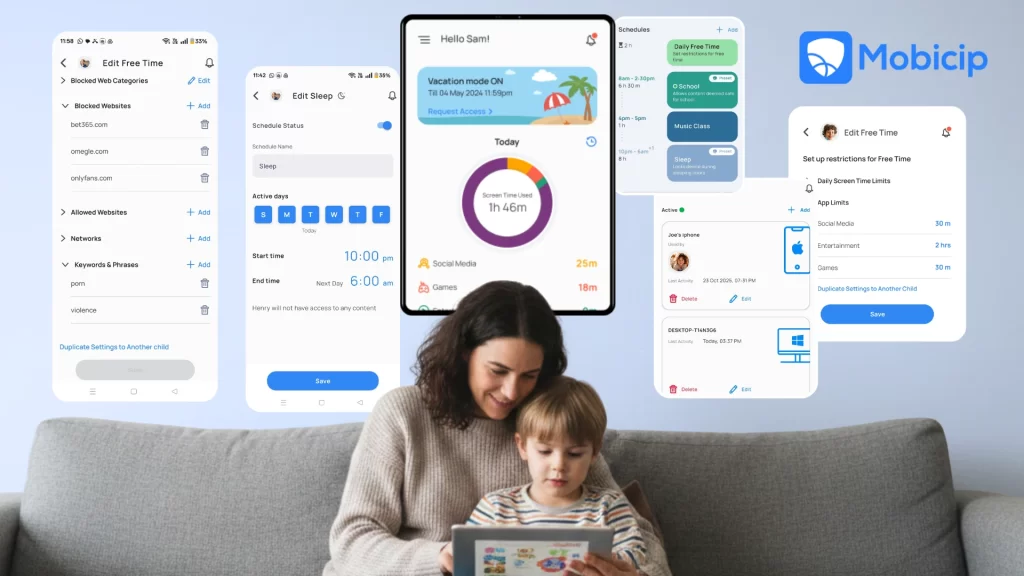

How Mobicip Helps Parents Manage AI-Based Features

Mobicip can not only help parents manage AI-based features in various apps but also help them and their kids maintain a sense of digital discipline. It can do so through:

- App Blocking and Scheduling: Allows parents to block or limit access to apps that include AI chatbots, such as Snapchat, during specific times or entirely if needed.

- Screen Time Limits: Helps prevent excessive reliance on AI-driven interactions by setting daily screen time caps across devices and apps.

- Web Content Filtering: Filters inappropriate, misleading, or harmful online content that children may be directed to through AI-generated links or suggestions.

- Activity Monitoring and Reports: Provides insights into app usage patterns, helping parents spot overuse or sudden increases in time spent on AI-enabled platforms.

- Custom Profiles by Age: Allows parents to tailor restrictions based on a child’s age and maturity level, which is especially important as AI features behave differently for younger users and teens.

- Cross-Device Coverage: Ensures consistent rules across phones, tablets, and computers, so AI-based features can’t be accessed unchecked on secondary devices.

- Conversation Starters for Families: Supports healthier digital discipline by giving parents the context they need to talk with kids about AI limitations, privacy, and safe online behavior.

Conclusion: Staying Involved as Technology Evolves

From Instagram’s litany of AI chatbots to X’s Grok, AI in apps, including ones used by kids, has become commonplace and is unlikely to vanish anytime soon. Parents don’t need to understand everything, but they do need to stay engaged. While interfering too much with the personal and online lives of children could backfire, educating them about digital safety and instilling in them a balanced approach to the online world can make a world of difference. Ultimately, Snapchat My AI safety depends on awareness, age-appropriate boundaries, and parental involvement. With the right conversations and controls, it is possible for families to navigate AI safely.

FAQs: Snapchat’s My AI and Kids’ Safety

1. Is Snapchat’s My AI safe for kids to use?

Snapchat’s My AI includes basic safeguards, but it is not completely risk-free for children. The chatbot can sound confident and friendly while offering responses that may not always be accurate or age-appropriate, making parental guidance important.

2. Can My AI give kids misleading or inappropriate advice?

Yes. AI chatbots do not fully understand context, emotions, or consequences. This means My AI may sometimes provide incomplete, oversimplified, or inappropriate responses that children may mistake for reliable advice.

3. What privacy risks does My AI pose for children?

Kids may share personal thoughts, emotions, or identifying details during conversations with My AI without realizing that these interactions can be stored or used to improve the system, raising concerns about data privacy and long-term digital footprints.

4. Can children become emotionally dependent on AI chatbots like My AI?

Because My AI is always available and non-judgmental, some children may turn to it for comfort or reassurance instead of real people. Over time, this can affect how they seek help or process emotions offline.

5. How does Mobicip help parents manage My AI usage on Snapchat?

Mobicip allows parents to control access to Snapchat, set screen time limits, schedule usage, and monitor overall app activity. While it cannot read AI conversations, it helps reduce overuse and supports healthier boundaries around AI-driven interactions.