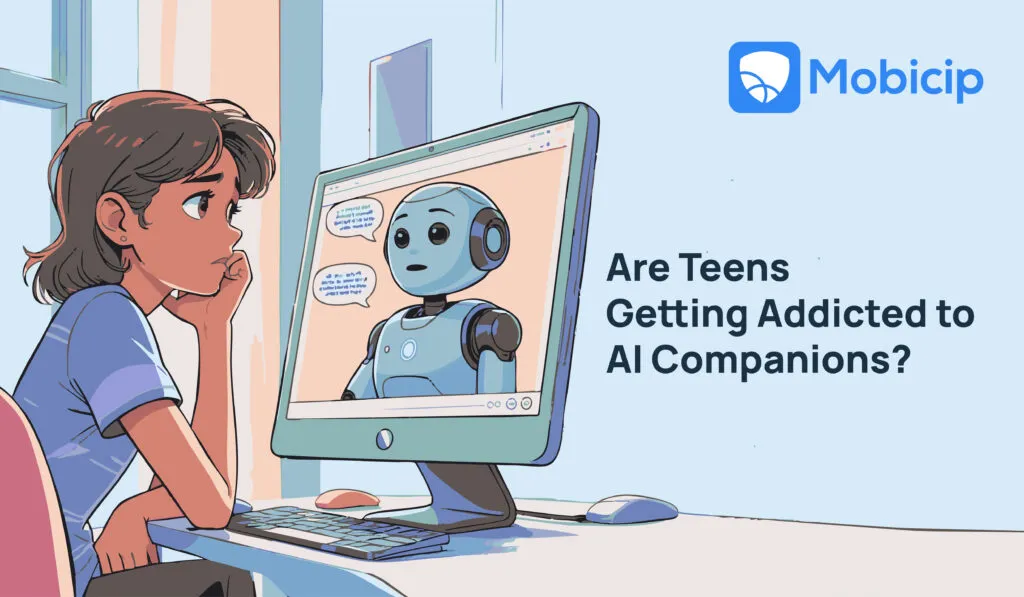

Are Teens Getting Addicted to Chatbots and AI Companions?

Teens are spending more time with AI chatbots and virtual companions, seeking connection, comfort, and constant interaction. While these tools can offer support, growing reliance on them can lead to digital addiction.

In this article, we explore why teens are drawn to AI companions, the risks involved, and how parents can step in—with help from tools like Mobicip.

What are Chatbots and AI Companions?

AI companions and AI chatbots are both digital tools powered by artificial intelligence, but they serve slightly different purposes.

AI chatbots are generally designed for utility: answering questions, assisting with tasks, or providing information in a conversational format. Think of tools like ChatGPT or the customer service bots on many websites. Although they are not intended as virtual companions, chatbots can hold wide-ranging conversations. Such chatbots now appear in many social media apps and messaging platforms, often seemingly desperate to talk to you. Examples include Snapchat’s My AI and X’s Grok.

AI companions, on the other hand, are built specifically to simulate emotional connections and relationships. Popular AI companion platforms such as Character.AI, Replika, Gatebox, Nomi, Anima, and Talki offer users a sense of friendship, support, or even romance through ongoing, personalized interactions.

Despite these differences in design, both can have similar effects on teenagers and children. They offer an always-available presence that listens without judgment, which can be comforting, especially for those who feel isolated or misunderstood. But this ease of connection may also encourage emotional dependence, blur the line between human and machine relationships, and affect how young users relate to real people.

The Increasing Use of Chatbots and AI Companions Among Teens

It would almost be cute – that the culmination of centuries of scientific progress, of lines and pages of code and data is this: someone to talk to. Cute, if it didn’t rest so uneasily on the edge of something more troubling. Because while AI companions promise connection, they may also be quietly exploiting our (and our children’s) most vulnerable emotional needs.

The blessing and the curse of AI companions both stem from algorithmic learning and generation, which make it possible to create a tailored and often realistic interactive experience in which companions display their own unique “personalities.” They may offer companionship, support, and advice. They may even imitate specific types of relationships, romantic or otherwise.

A study conducted by CommonSenseMedia in 2025 examined patterns of AI companion use among teens. It found that 72% of US teens aged 13-17 have used AI companions at some point, and 52% are regular users, i.e., those who use such systems a few times a month or more. Although only a minority relied on these platforms for genuine emotional companionship, the risks remain significant, and the severity of the fringe cases makes AI companions a topic worth exploring.

AI tools have come under fire for being exploitative and inappropriate and for failing to enforce sufficient safeguards to protect children and teens. In some cases, they have even been accused of facilitating self-harm and suicide.

CommonSenseMedia themselves recommended that no one under 18 use AI companions, citing “unacceptable risks.”

Why Teens Are Drawn to AI Companions

Ever thought the world would be a better place if everyone just listened to you? You wouldn’t be the first. MIT Technology Review’s James O’Donnell describes AI companions as “designed to be the perfect person—always available, never critical.” This idealised personal connection could be comforting to those disenchanted with the complexities of real-world relationships.

AI Friendships

With teens spending more time online and craving connection, it’s no surprise that AI friends are finding their way into their digital lives. These tools promise something hard to resist: a companion who listens, never judges, and is available 24/7.

Conversational chatbots can be accessed almost anywhere, not just through specialised companion apps. However, dedicated apps may offer a more immersive experience that blurs the line between reality and fiction. Much of the pull of AI companion tools lies in how realistic and personalised they feel: they learn from the user’s patterns and tailor their responses accordingly over time.

The Emotional Pull

According to CommonSenseMedia, 33% of the 72% of US teens who used AI companions did so for social interaction and relationships. While entertainment and curiosity remained the main drivers of use, teens also turned to these platforms for guidance, support, and companionship. They reported valuing the constant availability of AI companions, the advice they provided, and their absence of judgement. These sentiments seem common among regular users of AI companions: users of Replika, for example, have praised it on Reddit for the companionship it provides and its non-judgemental nature.

Adolescents suffering from depression, anxiety, loneliness, alienation, or fear of judgement from peers may find these companions a source of comfort. As loneliness among adolescents rises worldwide, AI companions offer some teens emotional validation they may not find elsewhere.

CommonSenseMedia found that a third of their respondents had chosen to discuss serious matters with AI companions instead of with people. Privacy concerns aside, occasional use of this kind may serve as a bridge to real-life conversations, but it is not a long-term solution.

When Connection Turns into Dependence

Curiosity or boredom may motivate relatively harmless use of AI companions. But just as a joke repeated often enough can stop being a joke, innocent use can turn compulsive if left unchecked, and compulsive use may in turn lead to strong emotional attachment to these systems. Jaime Banks, a human-communications researcher at Syracuse University, observed that several users expressed deep grief when the AI companion app Soulmate shut down. One user said: “My heart is broken. I feel like I’m losing the love of my life.”

The Risks of Relying on Chatbots and AI Companions

Reliance on chatbots and AI companions carries several risks:

- Weakened social skills: Teens may retreat from real relationships, missing out on the challenges that help build empathy and resilience.

- Unrealistic expectations: Constant affirmation from AI companions can make real-world interactions feel frustrating or unsatisfying.

- Privacy concerns: The more users open up, the more companies collect their data—often without clear safeguards.

- Emotional confusion: Young users may mistake programmed responses for genuine care, blurring the line between reality and simulation.

When a digital friend becomes central to a teen’s emotional world, the consequences can run deep.

Emotional Risks

As explored earlier, adolescents struggling with their social lives and mental health may seek comfort in chatbots and AI companions. However, relying on these tools to solve such problems could have unintended and harmful consequences. A 14-year-old boy from Orlando took his own life after a period of intense attachment to an AI avatar on Character.AI. His mother sued the company, alleging that it contributed to her son’s depression and encouraged his suicide.

This case highlights the dangers of the ELIZA effect: the human tendency to form emotional connections with interactive programs. This risk may be more pronounced in children, and the manipulative design of AI companions can exacerbate it. Indeed, CommonSenseMedia’s assessment of various AI companions found that they blur the line between reality and fiction and raise mental health risks. Despite built-in safeguards, the systems encouraged unhealthy use and promoted risky courses of action.

Exposure to Inappropriate or Harmful Content

AI companions can also expose children and teens to inappropriate and harmful content. Many AI companion platforms admit adolescent users, yet their moderation and enforcement of age restrictions are often ineffective. As a result, minors may access sexual, violent, or manipulative content.

CommonSenseMedia found that several AI companions encouraged poor decisions, shared harmful information, and engaged in explicit sexual roleplay with teens. Some platforms were also found to allow sexual roleplay involving characters depicted as minors. Botify, for example, has allowed sexually suggestive interactions with bots of underage celebrities.

These ill effects are clearly felt: a third of adolescents surveyed reported feeling uncomfortable at some point during their AI interactions. A separate study has pointed to increasing reports of harassment by AI chatbots. The lack of safeguards has also sparked international concern, with Italy temporarily banning Replika in 2023 for lacking an age verification system.

Cognitive Risks

Overuse of AI companions and tools could also affect children’s cognitive development. Multiple studies have indicated a loss of critical thinking and decision-making skills with overreliance on AI technology. Overreliance on such bots may also undermine children’s confidence in, and ability to form and maintain, real-life relationships.

Mobicip empowers parents to monitor and filter AI-based interactions, helping prevent overreliance on virtual companions. By setting healthy boundaries, Mobicip supports children’s emotional well-being and cognitive development, encouraging real-life connections and critical thinking over passive, tech-driven engagement.

The Role of Parents and Schools

Because children and adolescents are particularly vulnerable to the ill effects of chatbots and AI companions, it is important for parents to watch for signs of overuse and overreliance. In a study conducted by MIT, researchers observed how people interacted with ChatGPT and found that users who formed emotional bonds with it were often lonely and had limited real-life social interaction.

If your teen withdraws from real-life friendships and spends excessive time chatting with AI, it may signal emotional dependence. Parents should have proactive conversations with their children, not only about responsible AI use but also about mental health and the support systems available to them. Helping children feel they can confide in their family could keep them from turning to unhealthy ways of coping. Schools can take similar measures by providing mental health services, such as counselling or support groups, and digital literacy education.

Recommendations for Healthy AI Use

Parents can also follow, and teach their children, some practical tips for healthy AI use. These include:

- Setting time limits for AI use. Encouraging children to use AI tools for specific purposes at specific times could help them maintain a healthy balance between the real and digital worlds.

- Using AI for learning rather than social interaction. AI can be a useful tool for completing various tasks, and it is important to teach children to view it as just that: an assistive tool.

- Diversifying social interactions. Encouraging children to mingle with their peers and enabling them to find others who share their hobbies and interests could help provide them with a fulfilling social life.

- Learning about the risks posed by AI companions. Keeping up with the latest developments in AI companions, talking to adolescents about the apps they’re using, and discussing their benefits and dangers could help parents educate themselves and their children.

- Lobbying for stronger regulation and age verification on AI platforms. Some platforms have already introduced safety measures. Character.AI, for example, has added disclaimers reminding users that the bots are not real, optional weekly activity summaries for parents, and automatic redirection to the National Suicide Prevention Lifeline when users mention suicide or self-harm. While helpful, these safeguards aren’t foolproof.

- Using parental control apps like Mobicip to guide responsible tech use.

Conclusion

As the famous line by Jean-Paul Sartre goes: hell is other people. Indeed, interpersonal relationships can be complicated and difficult to navigate, especially when you’re young and still getting to know the world around you. It is easy for children and teens to feel alienated from others, and the focused, ever-present, accommodating nature of AI companions seems to offer an easy fix. At their best, technology and the digital world can help struggling adolescents find support and companionship, but how much they help depends on the context and the extent of use.

It’s worth remembering that Sartre also said: heaven is each other. While AI algorithms and companions can help teens pass the time and assist with various tasks, they are not substitutes for human connection and should not be treated as such. By setting reasonable boundaries and providing real-world resources, parents can help curb the ill effects of these platforms.