AI Deepfake Scams Targeting Youth: How to Spot & Prevent Them

Deepfake scams targeting children and teens are on the rise, with AI-generated videos and voice clips being used to manipulate, deceive, and extort.

The popular British science-fiction series Black Mirror features an episode in which a woman seeks solace in a digital reproduction of her deceased partner. The episode, titled “Be Right Back,” examines how something that mimics reality cannot replace it, yet can lead to delusion and vulnerability to manipulation. Today, deepfake tools can readily achieve this kind of mimicry and distortion of reality.

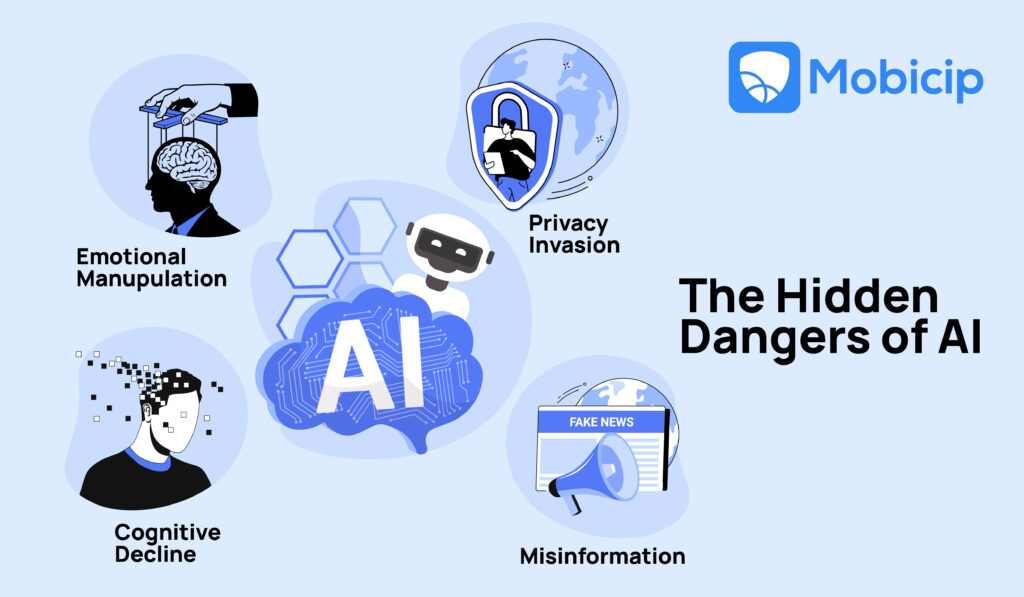

The term deepfake is a blend of the words “deep learning” and “fake.” It refers to synthetic media created with artificial intelligence techniques, in which neural networks generate or manipulate images, video, or audio to depict things that never happened. It often involves swapping or morphing faces, voices, and other elements of media. While some uses are harmless, deepfakes have also been exploited to spread misinformation, create explicit content, and run scams. It is crucial for parents to understand the risks and learn how to protect their children.

This article explores how scammers use deepfakes to conduct fraud, presents relevant case studies, and suggests measures to prevent and combat such behavior, including using trusted parental control solutions like Mobicip.

Understanding AI Deepfake Scams

One of the most prominent unethical uses of deepfakes is in scams. Scammers use AI to alter images, video, and audio for monetary gain or to spread misinformation. Identity theft is often a key feature of such scams, with deepfake technology used to manipulate or replicate a real person’s likeness. Such scams may involve:

- Generating fake media of a real-life figure endorsing various commodities.

- Generating fake media of a real-life figure saying or doing things they never did in order to spread misinformation, most notably to further political agendas.

- Phishing scams where scammers pose as legitimate organizations or individuals to trick consumers into revealing sensitive information.

- Impersonating a real-life figure to gain access to their personal accounts and sensitive information.

Beyond scams, deepfakes are commonly used maliciously to create explicit content. A 2019 Sensity AI report observes that around 90-95% of deepfake videos are nonconsensual pornography. The company’s 2020 report further uncovered over 100,000 explicit nonconsensual images of women, some of them minors. The use of deepfakes in cyberbullying is also a serious cause for concern.

Why Youth Are Vulnerable to Deepfake Scams

Young people are growing up in a digital world where it’s increasingly difficult to tell what’s real and what’s fake. With their deep immersion in social media and a still-developing ability to critically evaluate online content, children and teens are particularly susceptible to deception. This makes them prime targets for deepfake scams—especially when these scams exploit trust, authority, or peer influence.

The Psychological Impact of Deepfake Scams on Young Minds

Deepfake scams can harm not only a young victim’s finances or personal data but also their psychological well-being, leaving them anxious and slow to trust others. When such an experience occurs at a young age, the emotional impact can be even more severe and long-lasting. The use of deepfakes in cyberbullying can intensify these effects, leading to fear, shame, and significant mental health challenges.

Social Media Exposure Increases Vulnerability

As technology plays an ever larger role in daily life, many children grow up with the internet and social media as a central part of their lives. Studies show that up to 90% of teens aged 13-17 report having used social media, and while children younger than 13 are not typically allowed on social media platforms, they too use the internet for various activities. This greater exposure to the internet and social media makes them more vulnerable to scams and misinformation.

The Lack of Media Literacy Among Youth

With such widespread use of social media and a constant bombardment of information from all sides, there is good reason to be concerned about the effects poor media literacy might have on children. One study found that 38% of US adults were not taught media literacy at school. Over time, the unquestioning acceptance of misinformation erodes critical thinking and undermines the functioning of society.

Real-Life Examples

In 2018, American filmmaker Jordan Peele created a deepfake video of former President Barack Obama delivering a speech. He did this to demonstrate the danger of fake news and to warn the public to approach information with caution. Technologist Aviv Ovadya has likewise warned of a rise in misinformation; an article on his warnings describes a future filled with “a slew of slick, easy-to-use, and eventually seamless technological tools for manipulating perception and falsifying reality,” for which terms such as “reality apathy,” “automated laser phishing,” and “human puppets” have already been coined.

Many people may be tempted to dismiss such warnings as doomsday prophesying, but the warnings become difficult to ignore when they so clearly reflect reality. Below are a few real-life cases that represent only the tip of the iceberg of deepfake misinformation and fraud.

Deepfake Scam: Fraud

Deepfake Example

In 2023, a businessman in China was swindled out of ¥4.3 million ($622,000) by scammers who used face-swapping technology during a video call. In 2019, the CEO of a UK-based energy company transferred $243,000 after scammers used AI voice cloning to impersonate the chief executive of the firm’s German parent company and request the payment. In 2024, deepfakes of Elon Musk circulated online in which he appeared to promote fraudulent investment opportunities.

Potential Effect

- Monetary Loss: Victims suffer direct financial harm and may also be tricked into revealing sensitive information that jeopardizes their safety.

- Erosion of Trust: Witnessing such scams can erode trust in legitimate institutions and services.

Deepfake Scam: Cyberbullying

Deepfake Example

In a widely reported 2021 case, a Pennsylvania mother was accused of creating deepfake media showing her daughter’s cheerleading rivals engaging in explicit behavior, in an attempt to get them removed from the team.

Potential Effect

- Emotional Trauma: Affected teens can suffer humiliation and be left with lasting problems with trust and self-esteem.

- Peer Relationships: Such attacks can damage teens’ relationships with their peers and cause lasting harm to their reputations. They can also encourage and reinforce further bullying.

Deepfake Scam: Misinformation

Deepfake Example

In 2019, a doctored video of then-House Speaker Nancy Pelosi, slowed down to make her speech sound slurred, circulated widely online. In 2022, a deepfake of Russian President Vladimir Putin circulated in which he appeared to declare peace with Ukraine. A similar deepfake of Ukrainian President Volodymyr Zelenskyy appeared to show him surrendering.

Potential Effect

- Political Misinformation: Such videos spread false narratives about political figures and can sway public opinion and action. They can be especially dangerous during emergencies.

- Media Literacy: Uncritically accepting such videos as truth undermines critical thinking.

- Desensitization: An overload of fake news can desensitize people to real-world issues and conflicts.

While the above cases illustrate malicious uses of deepfakes, the technology has also been used constructively. One example is a Malaria No More clip in which David Beckham appears to speak in nine languages, raising public awareness of malaria and appealing for donations. Although such uses show how this technology can further the public good, they also raise concerns about the public’s eventual inability to distinguish fact from fiction. The question of when, and in what manner, it is ethical to use AI-generated synthetic media continues to spark public debate and controversy.

Preventing Deepfake Scams

Today, deepfakes have become a fact of life and appear commonly across the Internet. Parents can take several steps to protect themselves and their children.

Discretion

Learning to recognise deepfakes and inauthentic content goes a long way toward shielding against misinformation and fraud. When examining a piece of media to assess its authenticity, it is advisable to look for the following:

Visual Inconsistencies

These include:

- unnatural facial movements,

- lip-sync errors,

- lighting distortions,

- inconsistencies in picture quality,

- unnatural blurring, and

- unintentional anomalies in anatomy, environment, and perspective.

Audio Anomalies

These include robotic-sounding voices and mismatched lip movements, as well as inconsistencies in tone, intonation, and phrasing. Beyond visual and audio cues, it is also important to consider the context in which a clip appears. As a rule of thumb, it is wise to practice the following when engaging with online content and requests:

Source Verification

A good way to verify news and media is to check the legitimacy of the source. It is also important to look for secondary sources and to run a reverse image search on the image in question.

Re-examination of Suspicious Requests

It is always wise to be wary of requests for money transfers or personal data. Verify the sender’s identity, ideally through a separate channel you already trust, to make sure such requests aren’t fraudulent.

Teaching children these habits at an early age can help keep them safe and instill a sense of social responsibility.

Legal & Ethical Measures

Reading up on local government regulations on deepfake technology is useful. The EU, for example, has a comprehensive piece of legislation known as the EU AI Act. Noting down local helpline numbers and services is also important, and many police departments around the world have dedicated units for cybercrime.

Community Vigilance

Reporting suspicious content, and encouraging others to do the same, is advisable, as is reading app guidelines and local laws on permissible content. It is important to create a safe, non-judgemental space where victims of deepfake attacks can seek help. Raising awareness of local avenues of assistance for such crimes also helps.

Parental Controls & AI Tools

Parental control tools such as Mobicip can help parents keep track of their child’s online activity through features such as content filtering, real-time alerts, and safe browsing. Such tools can block or restrict access to harmful content, including malicious deepfakes, and boost online safety.

The Role of AI and Technology in Fighting Deepfake Scams

There is no discounting the role of technology in combating malicious deepfake activity. This includes actions taken by online platforms as well as tools and software developed specifically to detect and flag deepfakes.

AI-Powered Detection Tools for Deepfake Scams

In some cases, the malady can also serve as the cure. Users can deploy AI-powered tools to detect and flag deepfakes; Sensity and Sentinel, for example, are two popular AI-driven programs built for this purpose. Similar tools can help combat other AI-assisted scams: in 2024, Visa reported that machine learning in its fraud prevention processes had helped prevent about $40 billion in fraudulent activity.

Future Advancements

As deepfake technology becomes more sophisticated, AI-driven solutions for scam prevention are also evolving rapidly. Experts expect future tools to leverage multi-modal analysis (cross-checking visual, audio, and behavioral patterns) to detect manipulated content more accurately. Developers are also exploring real-time verification systems, such as digital watermarking and blockchain-based authentication, that establish the authenticity of media at the point of creation. In addition, collaborations between tech companies, researchers, and law enforcement are likely to produce more robust frameworks that not only flag deepfakes but also trace their origin. These advancements will play a crucial role in building a safer digital environment, especially for vulnerable populations like children and teens.

Social Media Policies

Several social media platforms have taken steps to combat deepfake threats. X, for example, has an authenticity policy that prohibits fraud and the spread of misleading synthetic media on its platform. Governments also issue directives requiring social media platforms to comply with local rules on synthetic media.

Mobicip’s Role in Protecting Children from Deepfake Threats

Mobicip is a parental control tool designed to help families create a safe and balanced digital environment. When it comes to protecting kids from deepfake threats, Mobicip offers a range of features that allow parents to stay informed and in control.

Here’s how Mobicip can help:

- Content Filtering: Blocks websites and videos known to host deepfake content, including AI-generated pornography or scams.

- Social Media Monitoring: Tracks social media use and flags suspicious or manipulated content your child may come across.

- Real-time Alerts: Notifies parents instantly if a child interacts with harmful or inappropriate content, including deepfakes.

- Safe Browsing Tools: Enforces strict search filters and blocks access to platforms commonly used to share deepfakes.

- Screen Time Management: Limits the time kids spend on video-heavy apps, reducing exposure to manipulated content.

With Mobicip, parents don’t have to watch every click or scroll. The platform does the heavy lifting, helping families stay one step ahead in an increasingly AI-driven world.

Conclusion

With the increasing sophistication and accessibility of deepfake technology, there is a growing risk of young people falling prey to digital misinformation and exploitation. Digital literacy is a crucial need in today’s world, and maintaining a healthy scepticism towards the content we consume is essential. It is advisable to cultivate in children the habit of thinking critically about the world around them and verifying sources of information. Maintaining an open dialogue with them about their online experiences and implementing the right social and technological measures can go a long way in empowering them to use the Internet in safe and constructive ways.
